StableMDS paper accepted to AISTATS2025

Our paper has been accepted to the 28th International Conference on Artificial Intelligence and Statistics (AISTATS2025), one of the top-ranked conferences in the machine learning field.

StableMDS: A Novel Gradient Descent Method for Stabilizing and Accelerating Weighted Multidimensional Scaling

- Authors: Zhongxi Fang (D2), Xun Su (D2), Tomohisa Tabuchi (M2), Jianming Huang (D3), and HK

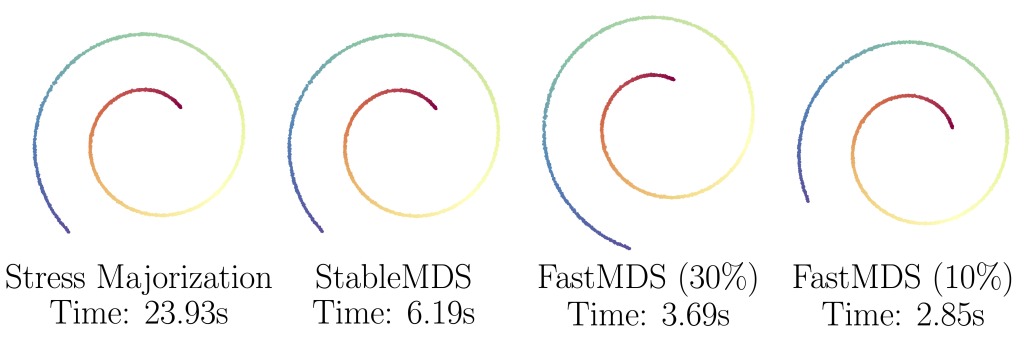

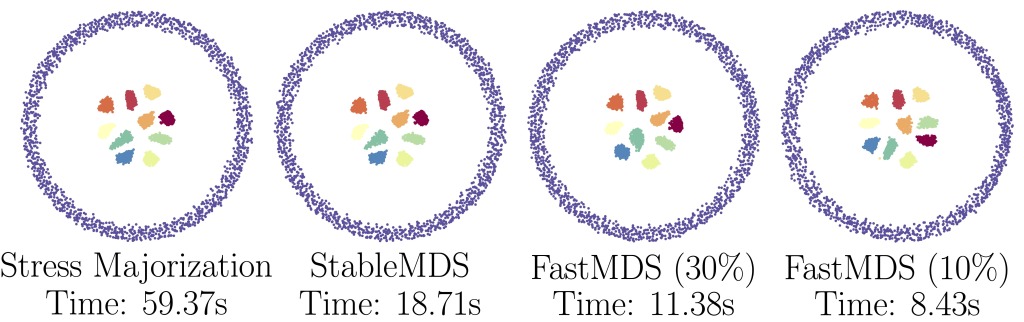

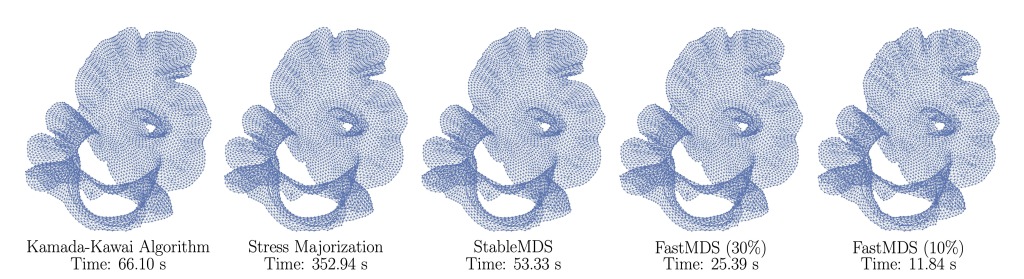

- Abstract: Multidimensional Scaling (MDS) is an essential technique in multivariate analysis, with Weighted MDS (WMDS) commonly employed for tasks such as dimensionality reduction and graph drawing. However, the optimization of WMDS poses significant challenges due to the highly non-convex nature of its objective function. Stress Majorization, a method classified under the Majorization-Minimization algorithm, is among the most widely used solvers for this problem because it guarantees non-increasing loss values during optimization, even with a non-convex objective function. Despite this advantage, Stress Majorization suffers from high computational complexity, specifically $\mathcal{O}(\max(n^3, n^2 p))$ per iteration, where $n$ denotes the number of data points and $p$ represents the projection dimension, rendering it impractical for large-scale data analysis. To mitigate the computational challenge, we introduce StableMDS, a novel gradient descent-based method that reduces the computational complexity to $\mathcal{O}(n^2 p)$ per iteration. StableMDS achieves this computational efficiency by applying gradient descent independently to each point, thereby eliminating the need for costly matrix operations inherent in Stress Majorization. Furthermore, we theoretically ensure non-increasing loss values and optimization stability akin to Stress Majorization. These advancements not only enhance computational efficiency but also maintain stability, thereby broadening the applicability of WMDS to larger datasets.

- Paper: Coming soon.

- Source code: GitHub.
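To make the per-point idea in the abstract concrete, here is a minimal pure-Python sketch of a generic per-point majorization update for weighted stress. It is not the StableMDS update rule, which this post does not give. It only illustrates why moving one point at a time avoids the n × n matrix operations of the full Guttman transform used by Stress Majorization. The function names `weighted_stress` and `per_point_sweep`, the sequential update order, and the 1/δ² weights in the usage part are illustrative assumptions. For non-negative, symmetric weights and dissimilarities, each single-point update minimizes a quadratic majorizer of the stress with the other points held fixed. It also equals a gradient step on that point with step size 1/(2 Σ_j w_ij). As a result, the stress cannot increase from one update to the next, and a sweep over n points in p dimensions costs O(n²p).

```python
import math
import random


def weighted_stress(X, delta, W):
    """Weighted raw stress: sum over pairs i < j of w_ij * (||x_i - x_j|| - delta_ij)**2."""
    n = len(X)
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            total += W[i][j] * (math.dist(X[i], X[j]) - delta[i][j]) ** 2
    return total


def per_point_sweep(X, delta, W):
    """One in-place sweep of per-point majorization updates, visiting points in order.

    Assumes W and delta are symmetric and non-negative (illustrative sketch,
    not the StableMDS update). With the other points fixed, the new x_i equals
    a gradient step on the stress with step size 1 / (2 * sum_j w_ij).
    Each point costs O(n * p), so a sweep costs O(n**2 * p) and never forms
    or inverts an n x n matrix.
    """
    n, p = len(X), len(X[0])
    for i in range(n):
        w_sum = sum(W[i][j] for j in range(n) if j != i)
        if w_sum == 0.0:
            continue  # no weighted neighbours: leave x_i where it is
        acc = [0.0] * p
        for j in range(n):
            if j == i or W[i][j] == 0.0:
                continue
            d = math.dist(X[i], X[j])
            # Neighbour j proposes the point at distance delta_ij from x_j along
            # the current direction x_j -> x_i (just x_j if the points coincide);
            # x_i becomes the w-weighted average of these proposals.
            push = W[i][j] * delta[i][j] / d if d > 0.0 else 0.0
            for k in range(p):
                acc[k] += W[i][j] * X[j][k] + push * (X[i][k] - X[j][k])
        X[i] = [a / w_sum for a in acc]
    return X


# Usage: embed 30 random 5-D points into 2-D with hypothetical 1/delta^2 weights.
random.seed(0)
n, p = 30, 2
Y = [[random.gauss(0.0, 1.0) for _ in range(5)] for _ in range(n)]
delta = [[math.dist(Y[i], Y[j]) for j in range(n)] for i in range(n)]
W = [[0.0 if i == j else 1.0 / delta[i][j] ** 2 for j in range(n)] for i in range(n)]
X = [[random.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]

history = [weighted_stress(X, delta, W)]
for _ in range(20):
    per_point_sweep(X, delta, W)
    history.append(weighted_stress(X, delta, W))
print(f"stress: {history[0]:.3f} -> {history[-1]:.3f}")
```

Updating the points one at a time (Gauss-Seidel order) is what keeps this monotonicity argument simple. Updating all points simultaneously from the same old configuration is a different scheme that would need its own analysis.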